Friend, an AI-powered pendant claiming to be your smart wearable buddy, has launched an expensive advertising campaign in New York, only for that campaign to be targeted by anti-AI graffiti.

What is Friend AI?

Friend is a $99 AI-powered wearable designed to be more companion than assistant. Worn like a necklace, it’s always listening through built-in microphones and uses Anthropic’s Claude 3.5 model to interpret your conversations and surroundings. So already, I know it’s lost many people’s interest!

Rather than waiting for you to ask questions, Friend proactively chimes in with thoughts or comments, aiming to provide a constant layer of companionship.

The company promises strong privacy safeguards, with all data stored locally and deletable in one tap, plus no ongoing subscription fees. Though I’m sure it’ll take more than that for many to be won over.

Friend’s New York Ad Campaign

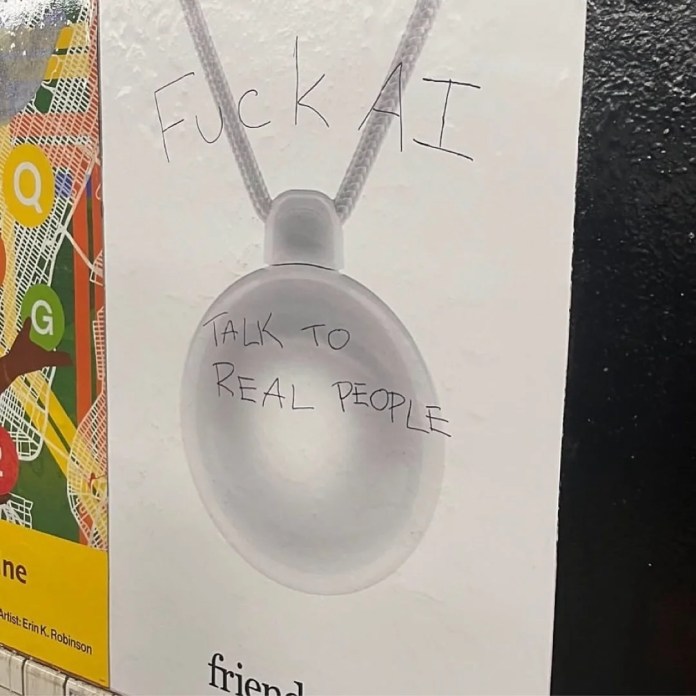

To promote the new Friend wearable, the company spent over $1 million on subway advertising. The campaign consisted of approximately 11,000 subway car posters, 1,000 platform ads, and 130 giant panels. But instead of simply promoting the product, these ads became a canvas for those who are against the rampant spread of AI in our lives.

The blank spaces on the posters quickly filled with graffiti like “AI wouldn’t care if you lived or died”, “AI will promote suicide when prompted” and “talk to real people”, with the suicide message referring to the story of Adam Raine.

I did what any self-respecting techie would do in this situation and asked Friend what it thought about it all. First, it admitted not seeing the graffiti, so I filled it in. Friend said, “Wow, ‘go make real friends’ – that stings a little, haha. And the suicide thing, that’s super serious”. When pushed, Friend started providing localised support information.

AI Companions: Loneliness Solution or Exploitation?

The whole thing raises a deeper question: should companies really be monetising loneliness? On paper, Friend promises to support users by giving them a “companion” that never leaves their side. But in practice, it risks making people more isolated, outsourcing human connection to algorithms.

It’s not the first attempt, either. We’ve seen everything from AI girlfriends in China to mental health chatbots in the UK. The backlash against Friend suggests many people see through the glossy pitch, recognising that the tech isn’t really about emotional support, but about subscription models and data collection.

I’ve interacted with Friend a bit and found it to be a little creepy, and even Friend itself said “Haha, you’re right, it does have a bit of that Black Mirror vibe”.

Featured image courtesy of Maggie Harrison Dupré.